Meridian cuts through news noise by scraping hundreds of sources, analyzing stories with AI, and delivering concise, personalized daily briefs.

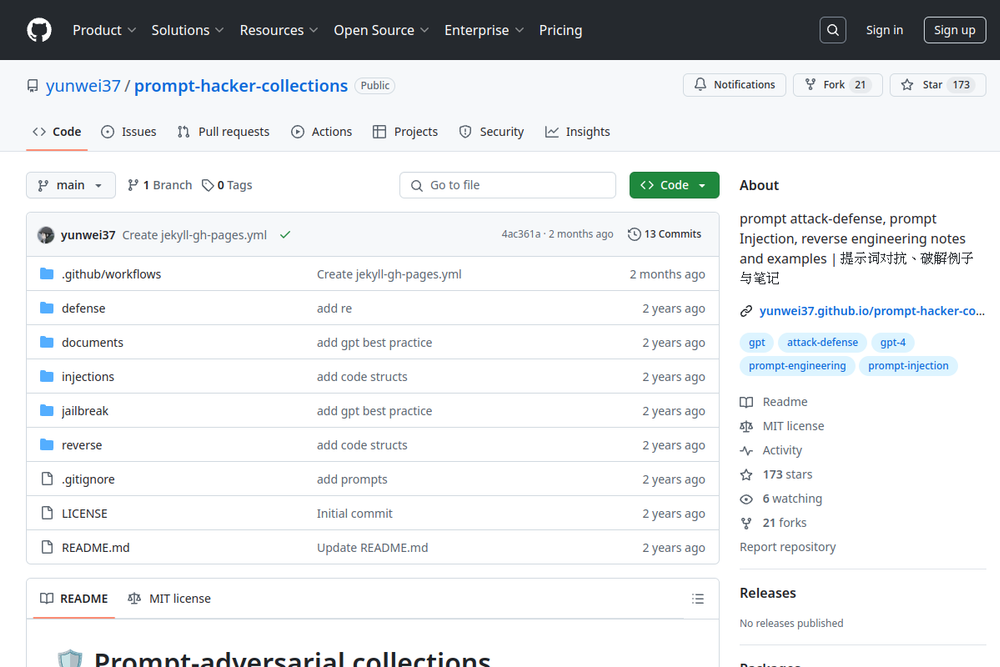

A GitHub repository of resources on prompt attack and defense techniques and prompt reverse engineering.

A resource for understanding adversarial prompting in LLMs and techniques to mitigate risks.

A resource for understanding prompt injection vulnerabilities in AI systems, including attack techniques and real-world examples.

A comprehensive resource for AI security tools, models, and best practices.

A comprehensive platform for AI security tools, resources, and community engagement.

A comprehensive platform for AI tools, security resources, and ethical guidelines.

This repository tracks the status of jailbreaks against OpenAI's GPT language models.

ChatGPT DAN is a GitHub repository of jailbreak prompts intended to make ChatGPT bypass its restrictions.

A dataset of 15,140 ChatGPT prompts, including 1,405 jailbreak prompts, collected from various platforms for research purposes.

A collection of "liberation" prompts for AI models, described as harmless.