Unofficial implementation of backdooring instruction-tuned LLMs using virtual prompt injection.

Code to generate NeuralExecs, execution triggers for prompt injection attacks tailored to LLMs.

A collection of leaked system instructions and prompts from ChatGPT's custom GPTs.

A repository for benchmarking prompt injection attacks against AI models like GPT-4 and Gemini.

Fine-tunes base models into robust, task-specific models.

Implementation of PromptCARE, a framework for prompt copyright protection through watermark injection and verification.

Official implementation of StruQ, which defends against prompt injection attacks using structured queries.

A writeup of the Gandalf prompt injection game.

The official implementation of a preprint paper on prompt injection attacks against large language models.

A benchmark for evaluating the robustness of LLMs and defenses to indirect prompt injection attacks.

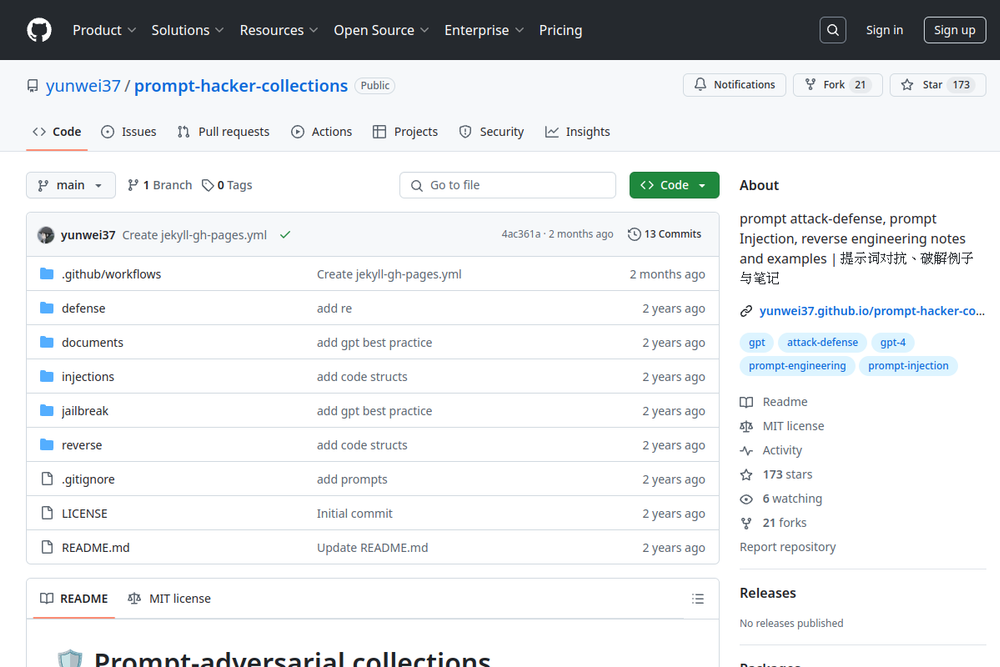

A GitHub repository collecting resources on prompt attack and defense techniques, as well as prompt reverse engineering.