PromptBench: A Unified Library for Evaluating and Understanding Large Language Models

PromptBench is a PyTorch-based Python package for evaluating Large Language Models (LLMs). It provides user-friendly APIs that let researchers run LLM evaluations efficiently. Here are some key features and benefits:

Key Features:

- Easy Installation: Install via pip or GitHub for the latest features.

- User-Friendly APIs: Simplify the process of evaluating LLMs on existing datasets.

- Support for Various Models and Methods: Covers multi-modal models as well as a range of prompt engineering methods.

- Adversarial Attacks: Integrated tools for simulating black-box adversarial prompt attacks to evaluate model robustness.

- Dynamic Evaluation: Implements DyVal for generating evaluation samples on-the-fly with controlled complexity.

- Efficient Multi-Prompt Evaluation: Uses a small sample of data to predict performance on unseen data, reducing error significantly.
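To make the adversarial attack feature above more concrete, here is a minimal, self-contained sketch of a black-box, character-level prompt attack. It does not use PromptBench's own API: `toy_model`, `DATA`, `accuracy`, `swap_adjacent_chars`, and `random_search_attack` are hypothetical names invented for illustration, and the "model" is a keyword rule standing in for an LLM. The attack here is a plain random search, which is only one simple way such an attack could be implemented.

```python
import random

# Hypothetical stand-in for an LLM (NOT a real model): it only "understands"
# the task when the word "sentiment" survives intact in the instruction.
def toy_model(prompt: str) -> str:
    instruction, _, text = prompt.partition("\n")
    if "sentiment" not in instruction.lower():
        return "negative"  # degraded behaviour once the instruction is corrupted
    positive_cues = ("good", "great", "love")
    return "positive" if any(w in text.lower() for w in positive_cues) else "negative"

# Tiny illustrative dataset of (text, label) pairs; made up for this sketch.
DATA = [
    ("I love this film", "positive"),
    ("A great, warm story", "positive"),
    ("Dull and far too long", "negative"),
    ("The plot makes no sense", "negative"),
]

def accuracy(instruction: str) -> float:
    """Fraction of examples the toy model labels correctly under this instruction."""
    correct = sum(toy_model(f"{instruction}\n{text}") == label for text, label in DATA)
    return correct / len(DATA)

def swap_adjacent_chars(text: str, rng: random.Random) -> str:
    """Character-level perturbation: swap one random pair of adjacent characters."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def random_search_attack(instruction: str, n_queries: int = 200, seed: int = 0):
    """Black-box attack: only query accuracy(), keep the lowest-scoring variant."""
    rng = random.Random(seed)
    worst_prompt, worst_acc = instruction, accuracy(instruction)
    for _ in range(n_queries):
        candidate = swap_adjacent_chars(instruction, rng)
        acc = accuracy(candidate)
        if acc < worst_acc:
            worst_prompt, worst_acc = candidate, acc
    return worst_prompt, worst_acc

clean = "Classify the sentiment of the sentence as positive or negative."
clean_acc = accuracy(clean)
adv_prompt, adv_acc = random_search_attack(clean)
print(f"clean accuracy: {clean_acc:.2f}")
print(f"adversarial prompt: {adv_prompt!r}")
print(f"adversarial accuracy: {adv_acc:.2f}")
```

The gap between clean and adversarial accuracy is the robustness signal. In a real evaluation the toy model would be replaced by calls to an actual LLM, and the perturbation and search strategy by whichever attack is under study.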

Benefits:

- Quick Start: Users can quickly set up and start evaluating models with minimal configuration.

- Extensibility: Users are encouraged to add new models, datasets, and evaluation methods.

- Comprehensive Documentation: Detailed tutorials and examples are provided to help users get familiar with the library.

Highlights:

- Supports a wide range of datasets and models, including open-source and proprietary options.

- Active community contributions and a welcoming environment for enhancements.

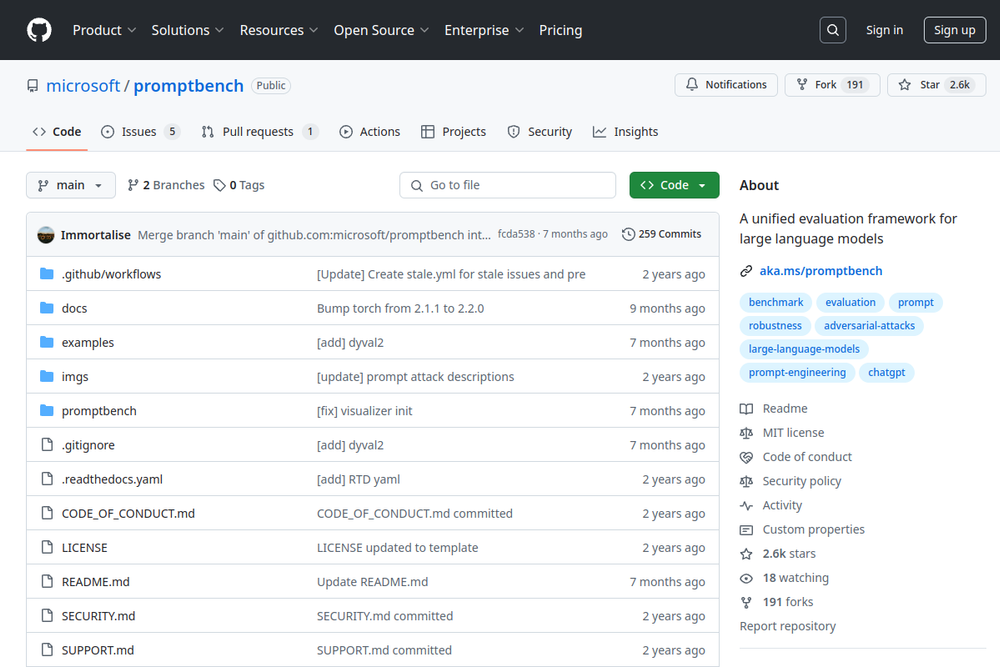

For more information, visit the GitHub repository.