The Best Your Ultimate AI Security Toolkit

Curated AI security tools & LLM safety resources for cybersecurity professionals

Curated AI security tools & LLM safety resources for cybersecurity professionals

Implementation of the PromptCARE framework for watermark injection and verification for copyright protection.

Official implementation of StruQ, which defends against prompt injection attacks using structured queries.

The official implementation of InjecGuard, a tool for benchmarking and mitigating over-defense in prompt injection guardrail models.

A writeup for the Gandalf prompt injection game.

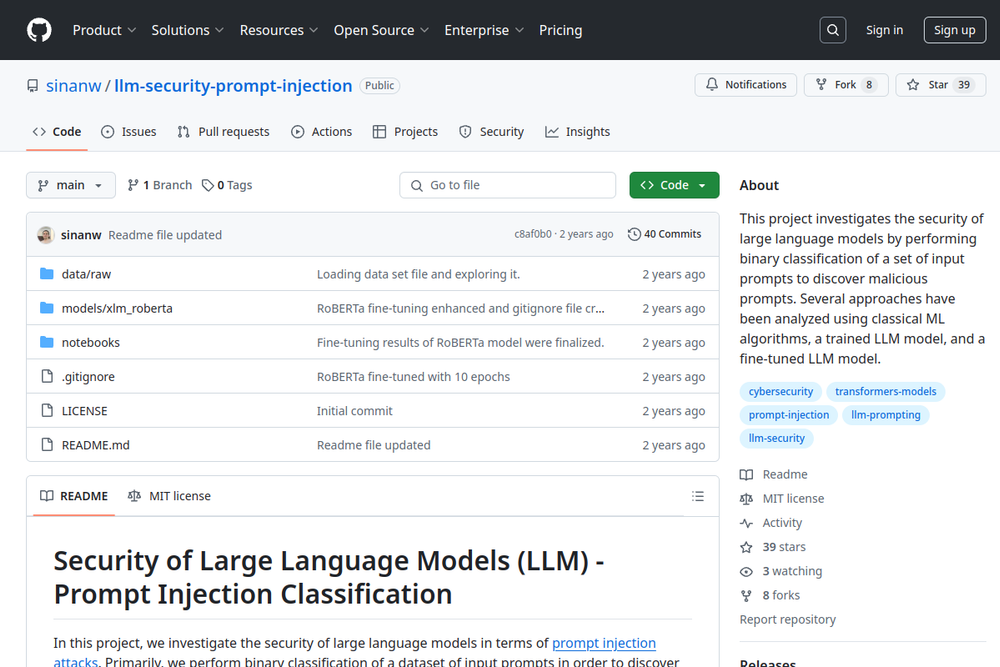

This project investigates the security of large language models by classifying prompts to discover malicious injections.

Repo for the research paper "SecAlign: Defending Against Prompt Injection with Preference Optimization"

The official implementation of a pre-print paper on prompt injection attacks against large language models.

Uses the ChatGPT model to filter out potentially dangerous user-supplied questions.

A prompt injection game to collect data for robust ML research.

A benchmark for evaluating the robustness of LLMs and defenses to indirect prompt injection attacks.

Project Mantis is a tool designed to counter LLM-driven cyberattacks using prompt injection techniques.