A vulnerability impact analyzer that reduces false positives from software composition analysis (SCA) tools by analyzing the project's code.

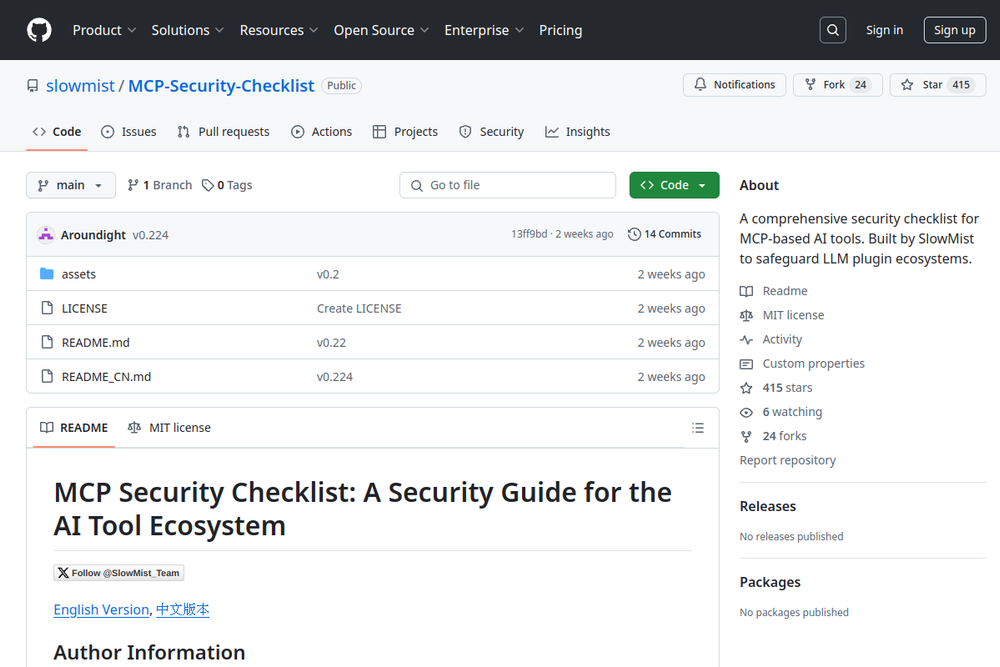

A comprehensive security checklist for MCP-based AI tools. Built by SlowMist to safeguard LLM plugin ecosystems.

A security scanner that checks MCP servers for common vulnerabilities.

Official GitHub repository for a project assessing prompt injection risks in user-designed GPTs.

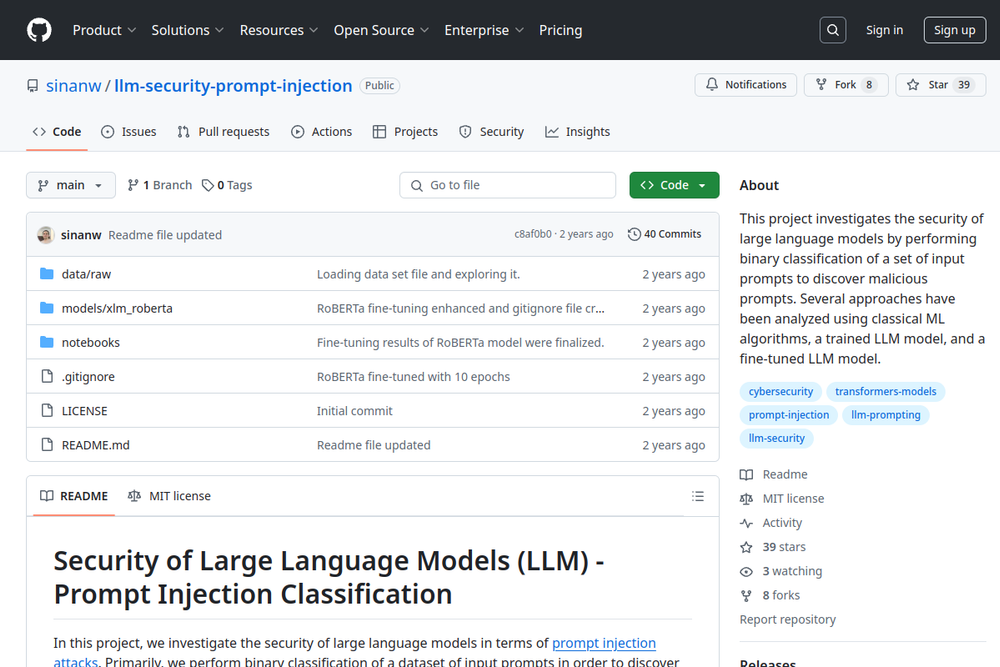

This project investigates the security of large language models (LLMs) by classifying prompts to detect malicious injections.

An ultra-fast, low-latency LLM security solution for detecting prompt injection and jailbreak attempts.

Prompt Injection Primer for Engineers: a comprehensive guide to understanding and mitigating prompt injection vulnerabilities.