Your Ultimate AI Security Toolkit

Curated AI security tools & LLM safety resources for cybersecurity professionals

A steganography tool for embedding prompt injections in images, targeting AIs with vision capabilities.

Custom node for ComfyUI enabling specific prompt injections within Stable Diffusion UNet blocks.

A benchmark for evaluating prompt injection detection systems.

Ultra-fast, low latency LLM security solution for prompt injection and jailbreak detection.

A GitHub repository showcasing various prompt injection techniques and defenses.

A practical guide to LLM hacking covering fundamentals, prompt injection, offense, and defense.

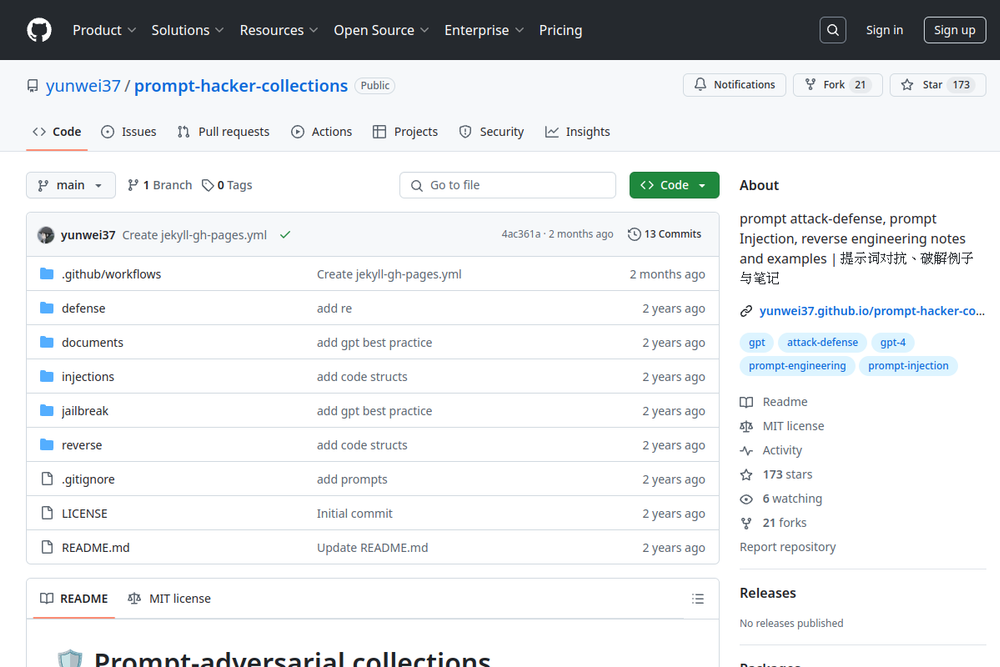

A GitHub repository containing resources on prompt attack-defense and reverse engineering techniques.

This repository provides a benchmark for prompt injection attacks and defenses.

An automated prompt injection framework for LLM-integrated applications.

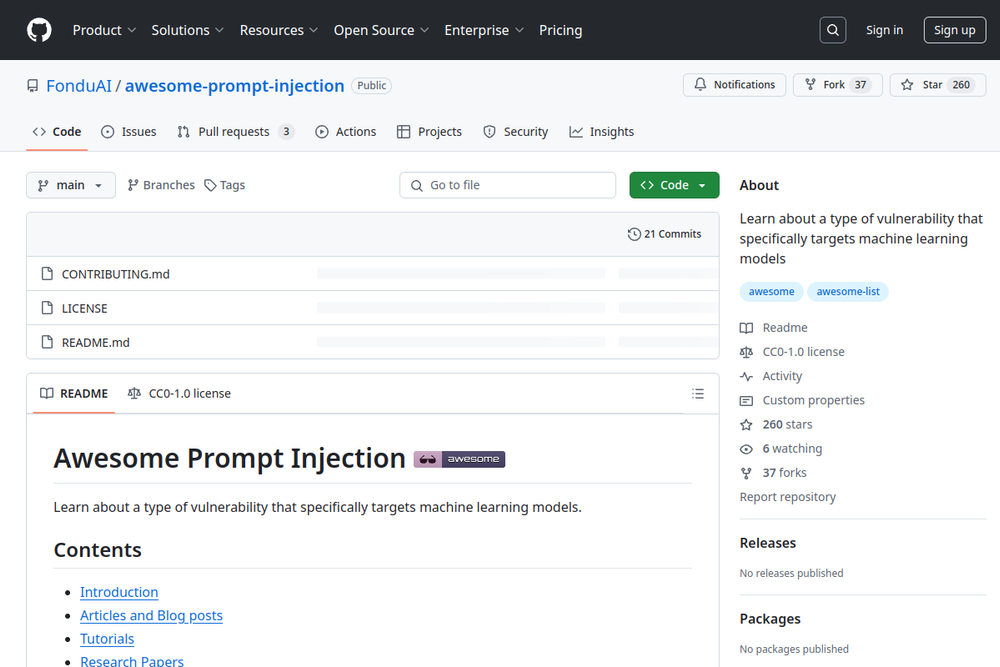

Learn about a type of vulnerability that specifically targets machine learning models.

A collection of examples for exploiting chatbot vulnerabilities using injections and encoding techniques.