A curated list of AI assistants, with a Telegram bot for trying them out.

Run your own AI cluster at home with everyday devices.

A hybrid-thinking tool for integrating AI models efficiently in Open WebUI.

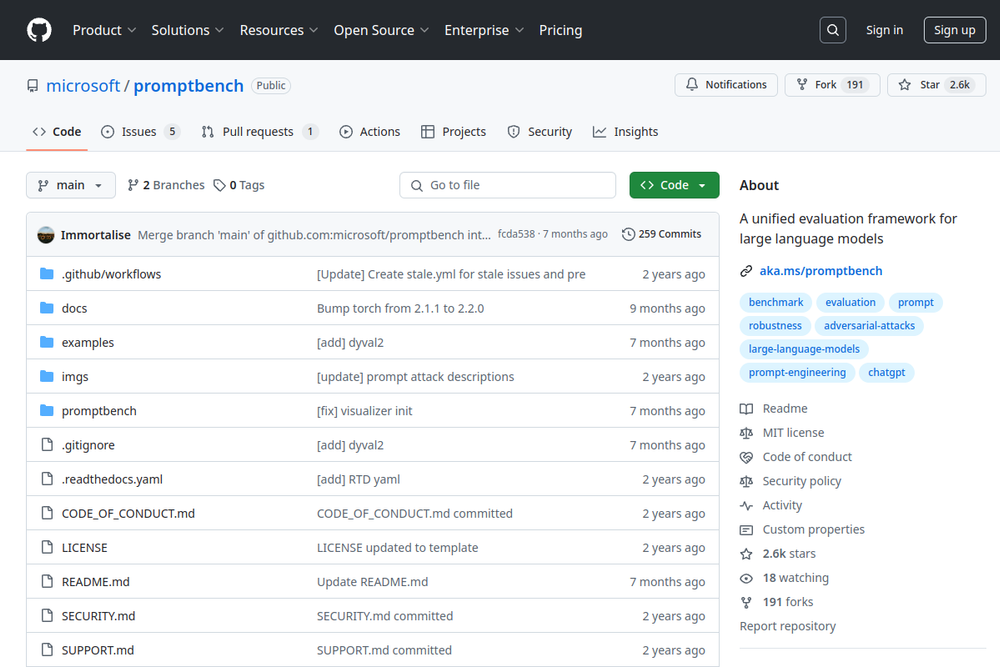

A unified evaluation framework for large language models.

AutoAudit is a large language model (LLM) for cybersecurity, aimed at AI-driven threat detection and response.

A curated list of tools, datasets, demos, and papers for evaluating large language models (LLMs).

Sample notebooks and prompts for evaluating large language models (LLMs) and generative AI.

Evals is a framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks.

A unified toolkit for automatic evaluations of large language models (LLMs).

An open-source project for comparing two LLMs head-to-head on a given prompt, with a focus on backend integration.