PFI is a system designed to prevent privilege escalation in LLM agents by enforcing trust and tracking data flow.

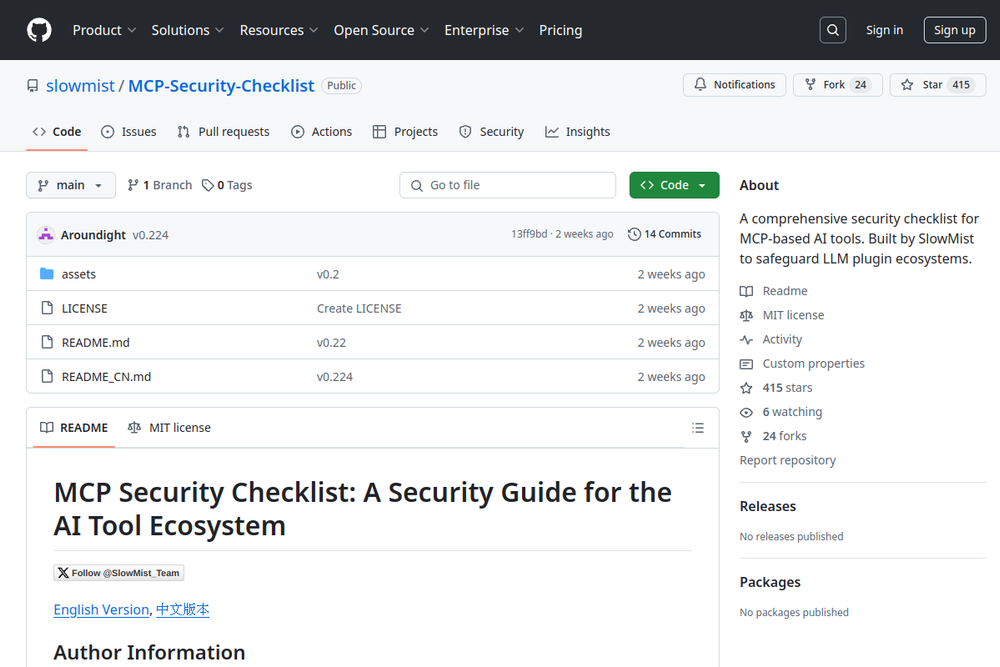

A comprehensive security checklist for MCP-based AI tools. Built by SlowMist to safeguard LLM plugin ecosystems.

A curated list of useful resources that cover Offensive AI.

A curated list of security tools, experimental cases, and other interesting resources related to LLMs and GPT.

Breaker AI is an open-source CLI tool for security checks on LLM prompts.

A framework for testing vulnerabilities in large language models (LLMs).

A curated reading list on adversarial perspectives and robustness in deep reinforcement learning.

Breaker AI is a CLI tool that detects prompt injection risks and vulnerabilities in AI prompts.

Red AI Range (RAR) is a Docker-based security platform for AI red teaming and vulnerability assessment.

A CLI that provides a generic automation layer for assessing the security of ML models.

A Python library designed to enhance machine learning security against adversarial threats.

AgentFence is an open-source platform for automatically testing AI agent security and identifying vulnerabilities such as prompt injection and secret leakage.