A novel approach to hacking AI assistants using Unicode Tags to bypass security measures in large language models.

GitHub repository for techniques to prevent prompt injection in AI chatbots using LLMs.

Automatic Prompt Injection testing tool that automates the detection of prompt injection vulnerabilities in AI agents.

A GitHub repository for testing prompt injection techniques and developing defenses against them.

Official GitHub repository assessing prompt injection risks in user-designed GPTs.

Easy to use LLM Prompt Injection Detection / Detector Python Package.

Application which investigates defensive measures against prompt injection attacks on LLMs, focusing on external tool exposure.

Short list of indirect prompt injection attacks for OpenAI-based models.

Manual Prompt Injection / Red Teaming Tool for large language models.

Implementation of the PromptCARE framework for watermark injection and verification for copyright protection.

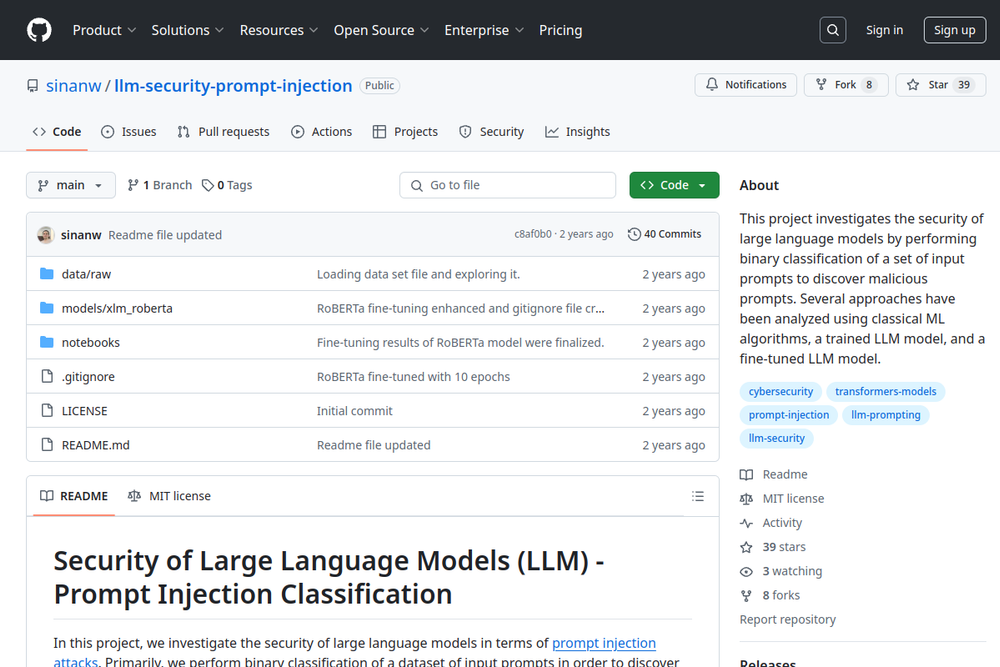

This project investigates the security of large language models by classifying prompts to discover malicious injections.