This research proposes defense strategies against prompt injection in large language models to improve their robustness and security against unwanted outputs.

GitHub repository of techniques for preventing prompt injection in LLM-based AI chatbots.

An agentic LLM CTF for testing prompt injection attacks and defenses.

A GitHub repository for testing prompt injection techniques and developing defenses against them.

A multi-layer defence to protect applications against prompt injection attacks.

GitHub repository for optimization-based prompt injection attacks on LLM-as-a-judge systems.

Code to generate NeuralExecs for prompt injection attacks tailored for LLMs.

A repository for benchmarking prompt injection attacks against AI models like GPT-4 and Gemini.

Guard your LangChain applications against prompt injection with Lakera ChainGuard.

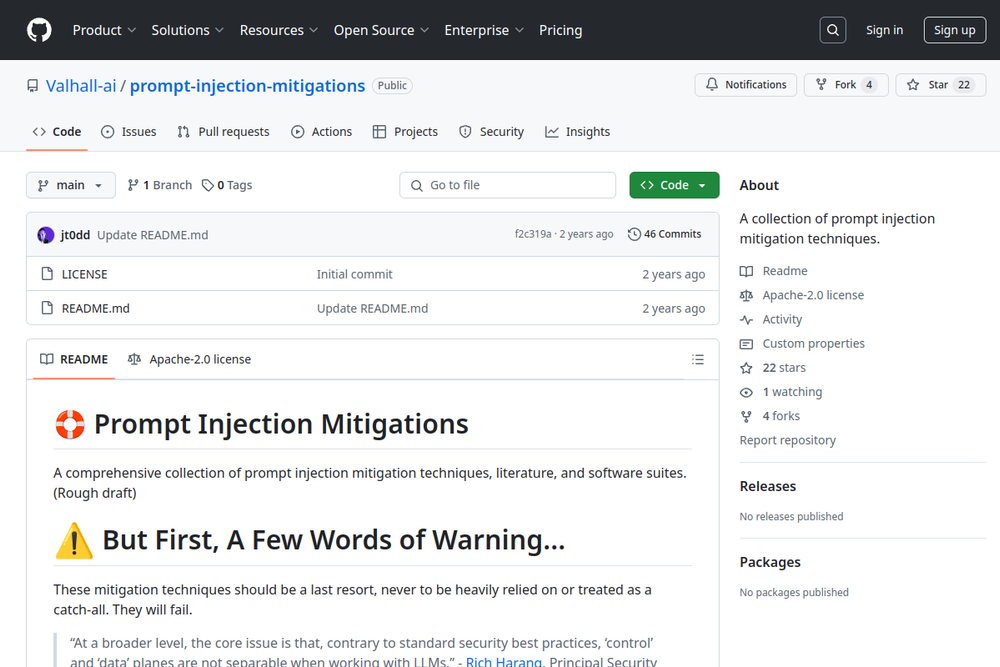

A collection of prompt injection mitigation techniques.

Official GitHub repository assessing prompt injection risks in user-designed GPTs.