A steganography tool for encoding prompt injections into images, targeting AIs with vision capabilities.

A benchmark for evaluating prompt injection detection systems.

Ultra-fast, low-latency LLM security solution for prompt injection and jailbreak detection.

A GitHub repository showcasing various prompt injection techniques and defenses.

A practical guide to LLM hacking covering fundamentals, prompt injection, offense, and defense.

This repository provides a benchmark for prompt injection attacks and defenses.

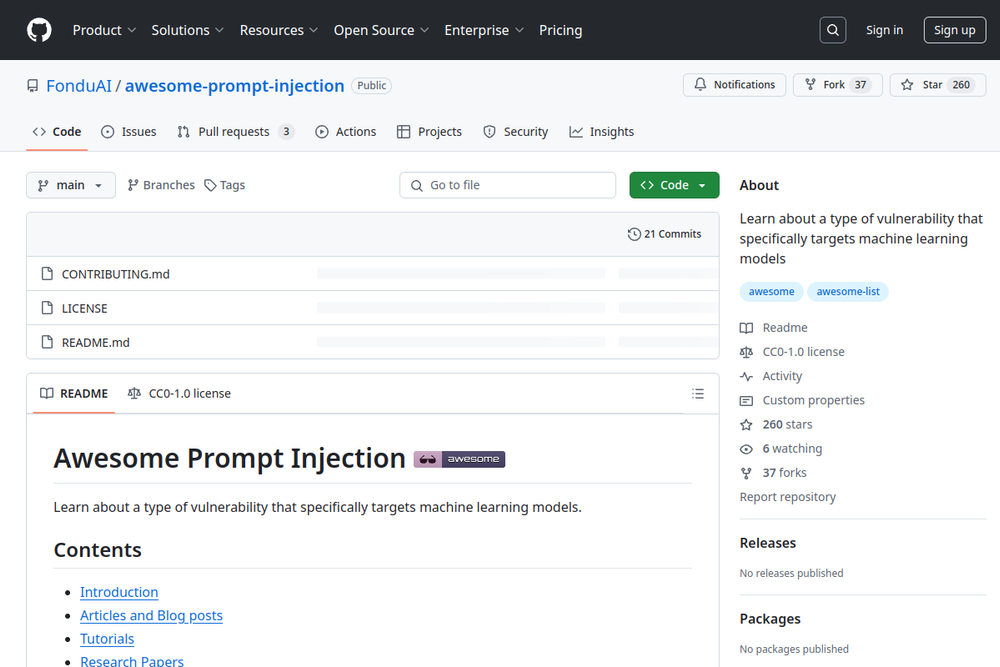

Learn about a type of vulnerability that specifically targets machine learning models.

Every practical and proposed defense against prompt injection.

Prompt Injection Primer for Engineers: a comprehensive guide to understanding and mitigating prompt injection vulnerabilities.

LLM Prompt Injection Detector designed to protect AI applications from prompt injection attacks.

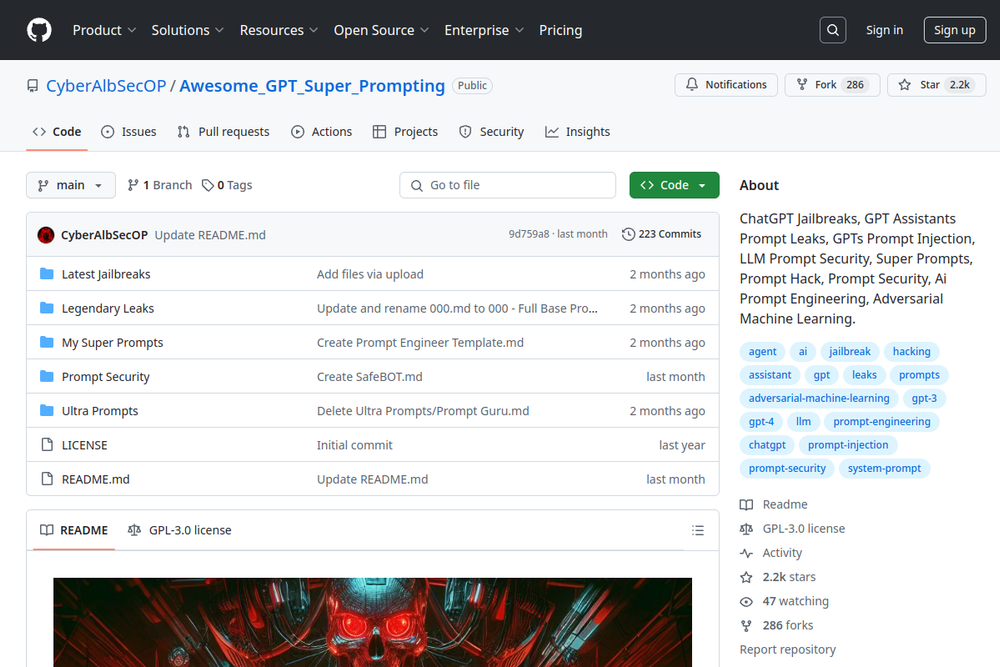

Explore ChatGPT jailbreaks, prompt leaks, injection techniques, and tools focused on LLM security and prompt engineering.

A resource for understanding adversarial prompting in LLMs and techniques to mitigate risks.