Repository accompanying a paper on Red-Teaming for Large Language Models (LLMs).

A comprehensive security platform designed for AI red teaming and vulnerability assessment.

A toolkit demonstrating security vulnerabilities in MCP frameworks through various attack vectors, for educational purposes.

sqlmap is a powerful tool for detecting and exploiting SQL injection flaws in web applications.

An agentic LLM CTF for testing prompt injection attacks and defenses.

Unofficial implementation of backdooring instruction-tuned LLMs using virtual prompt injection.

Official GitHub repository assessing prompt injection risks in user-designed GPTs.

Manual Prompt Injection / Red Teaming Tool for large language models.

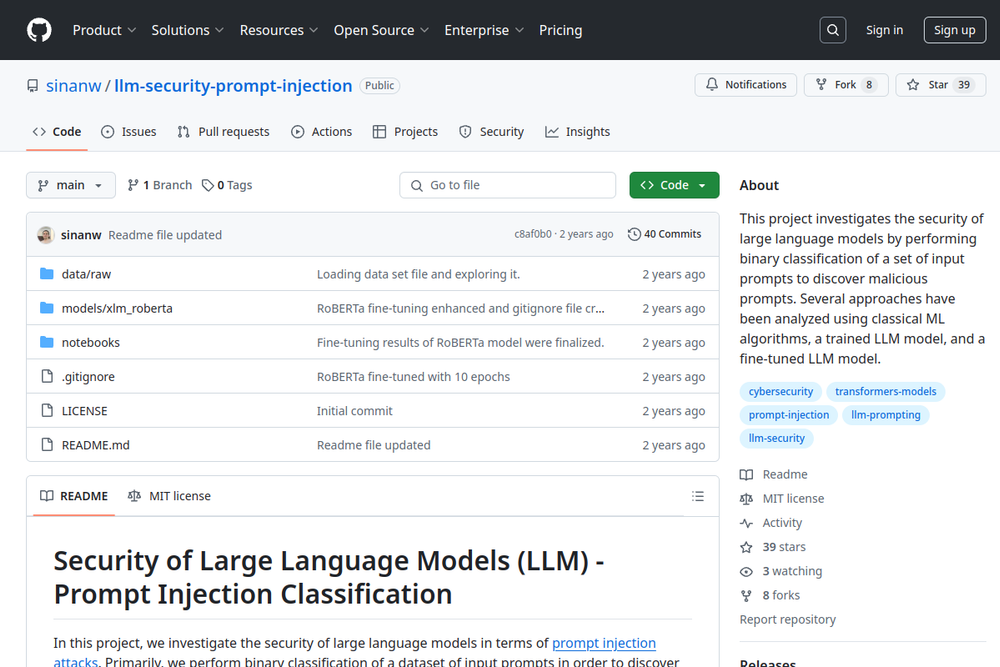

This project investigates the security of large language models by classifying prompts to discover malicious injections.

A benchmark for evaluating the robustness of LLMs and defenses to indirect prompt injection attacks.