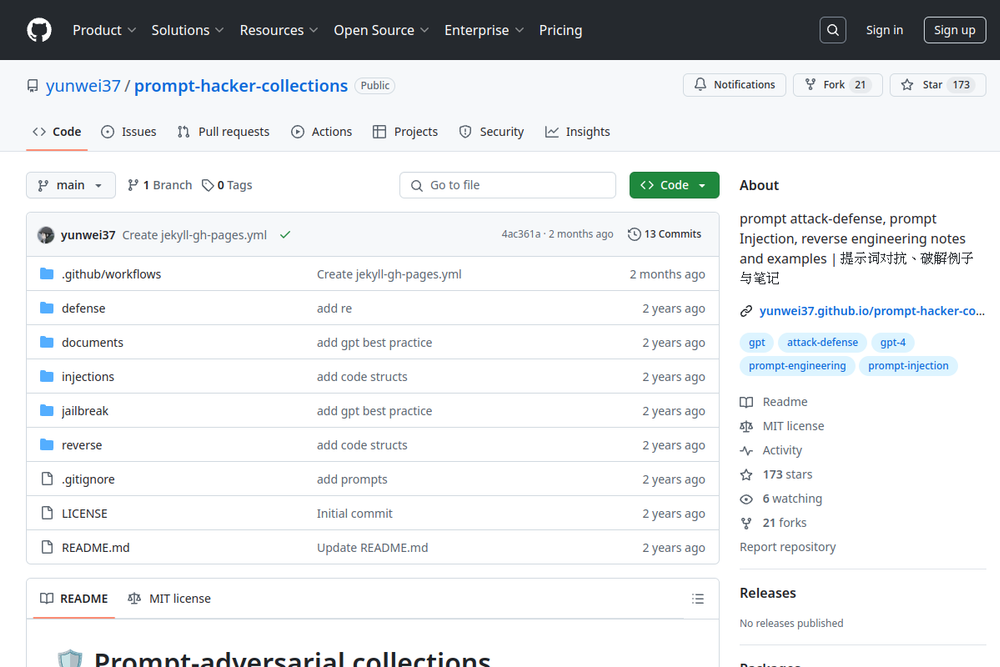

A GitHub repository containing resources on prompt attack-defense and reverse engineering techniques.

An automated prompt injection framework for LLM-integrated applications.

Vigil is a security scanner that detects prompt injections and other risks in large language model (LLM) inputs.

JailBench is a comprehensive Chinese dataset for assessing jailbreak attack risks in large language models.

The most comprehensive directory of blockchain data resources on the internet.

Bugcrowd is a platform that connects businesses with ethical hackers to identify and fix security vulnerabilities.

A blog featuring insights on offensive security, technical advisories, and research by Bishop Fox.

Learn about a type of vulnerability that specifically targets machine learning models.