A Python library designed to enhance machine learning security against adversarial threats.

A Python toolbox to create adversarial examples that fool neural networks in PyTorch, TensorFlow, and JAX.

An adversarial example library for constructing attacks, building defenses, and benchmarking both.

AgentFence is an open-source platform for automatically testing AI agent security, identifying vulnerabilities like prompt injection and secret leakage.

The official implementation of InjecGuard, a tool for benchmarking and mitigating over-defense in prompt injection guardrail models.

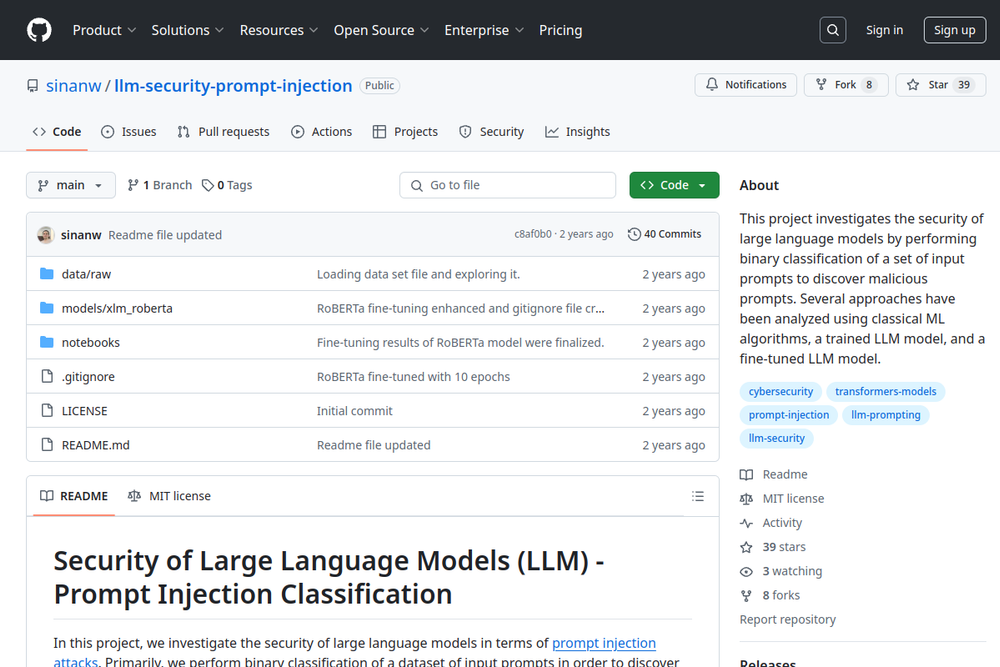

This project investigates the security of large language models by classifying prompts to discover malicious injections.

Repo for the research paper "SecAlign: Defending Against Prompt Injection with Preference Optimization"

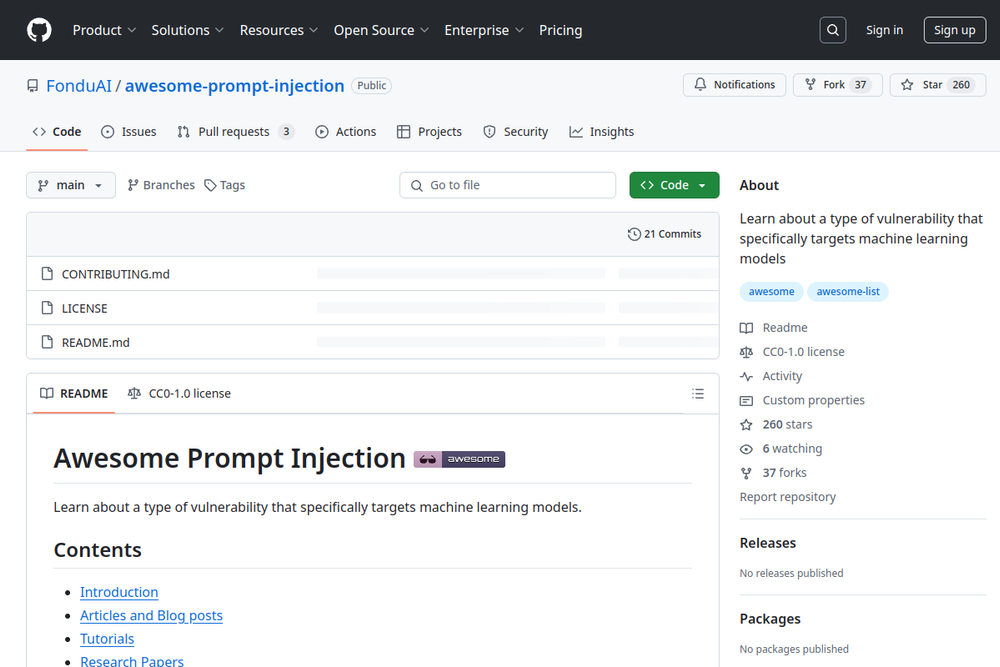

Learn about a type of vulnerability that specifically targets machine learning models.

This paper discusses new methods for generating transferable adversarial attacks on aligned language models, improving LLM security.

A resource for understanding adversarial prompting in LLMs and techniques to mitigate risks.

A plug-and-play AI red teaming toolkit to simulate adversarial attacks on machine learning models.