A PyTorch adversarial library for attack and defense methods on images and graphs.

An adversarial example library for constructing attacks, building defenses, and benchmarking both.

Manual Prompt Injection / Red Teaming Tool for large language models.

Uses the ChatGPT model to filter out potentially dangerous user-supplied questions.

A benchmark for evaluating the robustness of LLMs and defenses to indirect prompt injection attacks.

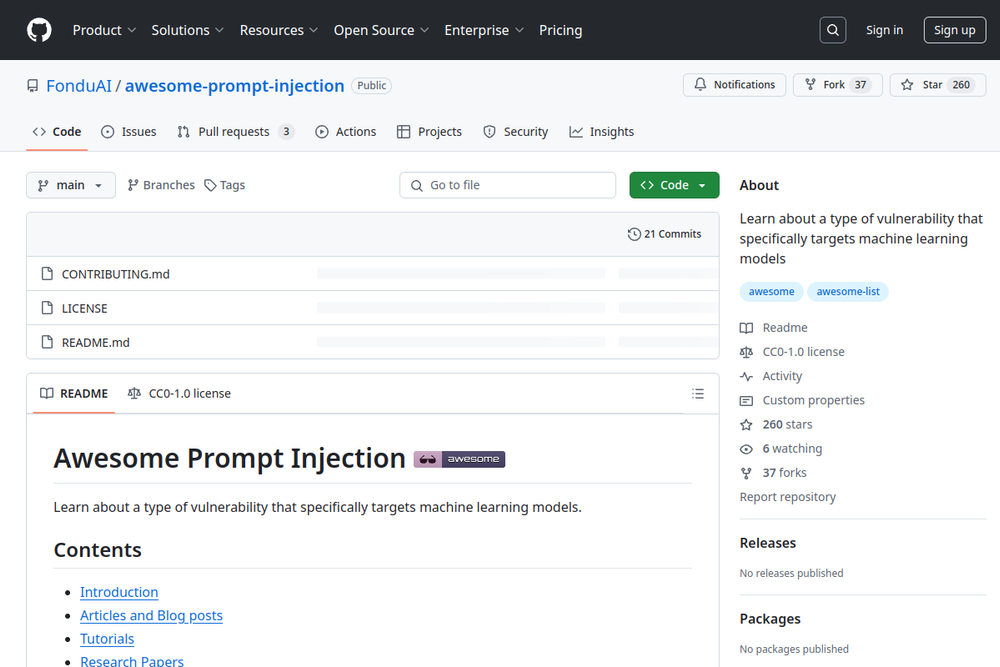

Learn about a type of vulnerability that specifically targets machine learning models.

This paper discusses new methods for generating transferable adversarial attacks on aligned language models, improving LLM security.

A comprehensive overview of prompt injection vulnerabilities and potential solutions in AI applications.

A blog discussing prompt injection vulnerabilities in large language models (LLMs) and their implications.

A resource for understanding prompt injection vulnerabilities in AI, including techniques and real-world examples.