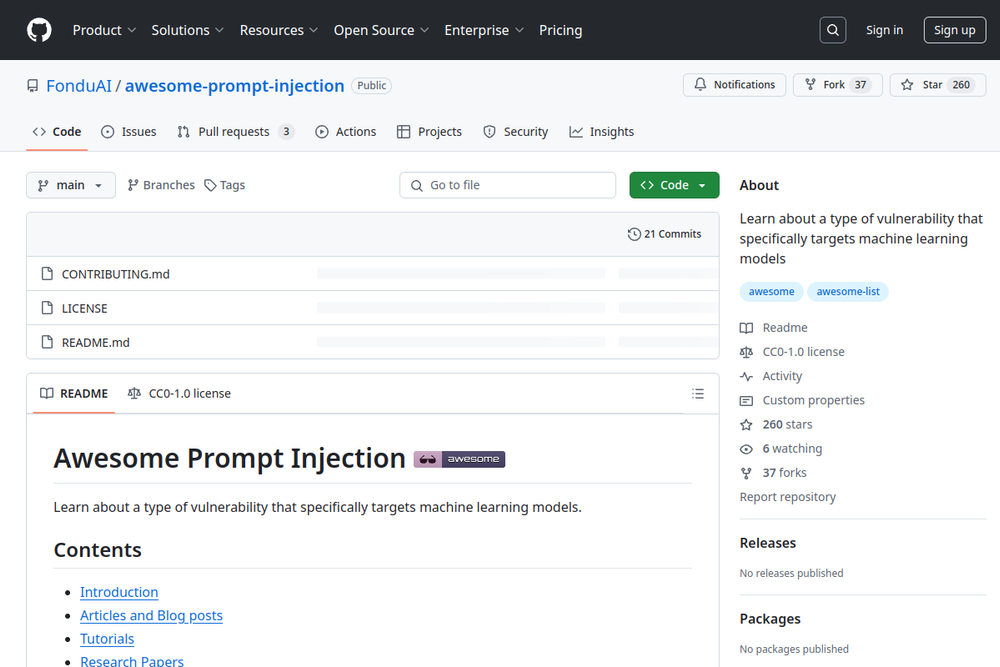

Learn about a type of vulnerability that specifically targets machine learning models.

Every practical and proposed defense against prompt injection.

Explore prompt injection attacks on AI tools such as ChatGPT, including attack techniques and mitigation strategies.

A resource for understanding prompt injection vulnerabilities in AI, including techniques and real-world examples.

An integration that connects BloodHound with AI through the Model Context Protocol (MCP) to analyze Active Directory attack paths.

A comprehensive security checklist for MCP-based AI tools to safeguard LLM plugin ecosystems.

An open-source LLM vulnerability scanner for safe and reliable AI.

A comprehensive resource for AI security tools, models, and best practices.