Tag

Explore by tags

genaiscript

Automatable GenAI Scripting for programmatically assembling prompts for LLMs using JavaScript.

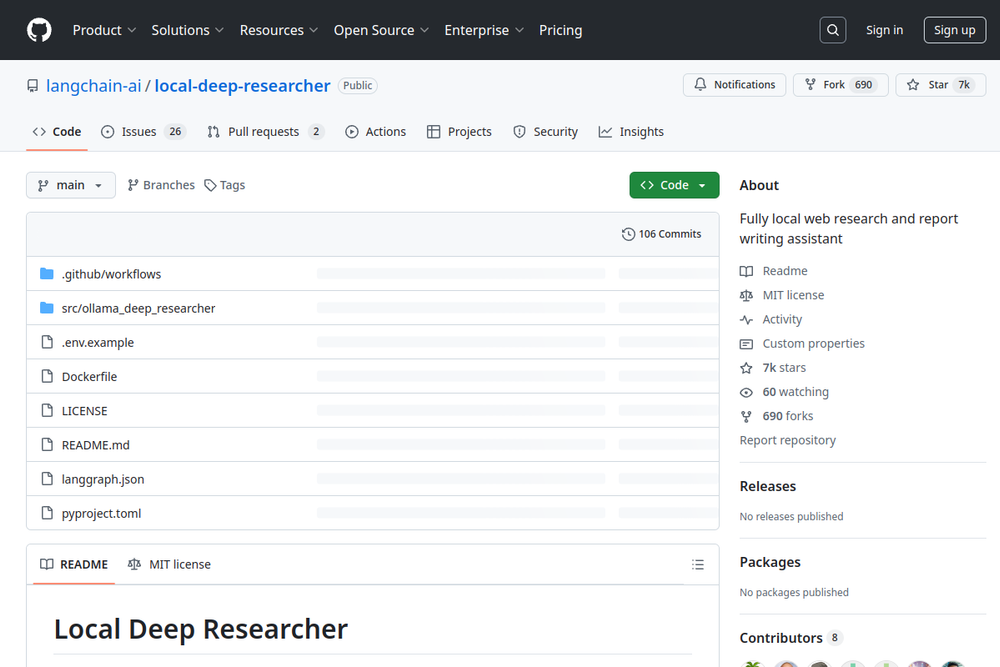

Local Deep Researcher

Fully local web research assistant using LLMs for generating queries, summarizing results, and writing reports.

obsidian-copilot

Copilot for Obsidian is an open-source AI assistant integrated into Obsidian for note-taking and knowledge management.

ollama-mcp-bridge

Bridge between Ollama and MCP servers, enabling local LLMs to use Model Context Protocol tools.

whatsapp-mcp

WhatsApp MCP server enabling users to interact with WhatsApp messages, contacts, and media through an MCP interface.

llm-server-docs

Documentation on setting up an LLM server on Debian from scratch, using Ollama/vLLM, Open WebUI, OpenedAI Speech/Kokoro FastAPI, and ComfyUI.

ktransformers

A flexible framework for optimizing local deployments of large language models with cutting-edge inference techniques.

LLMFarm

LLMFarm is an iOS and macOS app for offline use of large language models using the GGML library.

EdgePersona

EdgePersona is a fully local intelligent digital human that runs offline with low computational requirements.

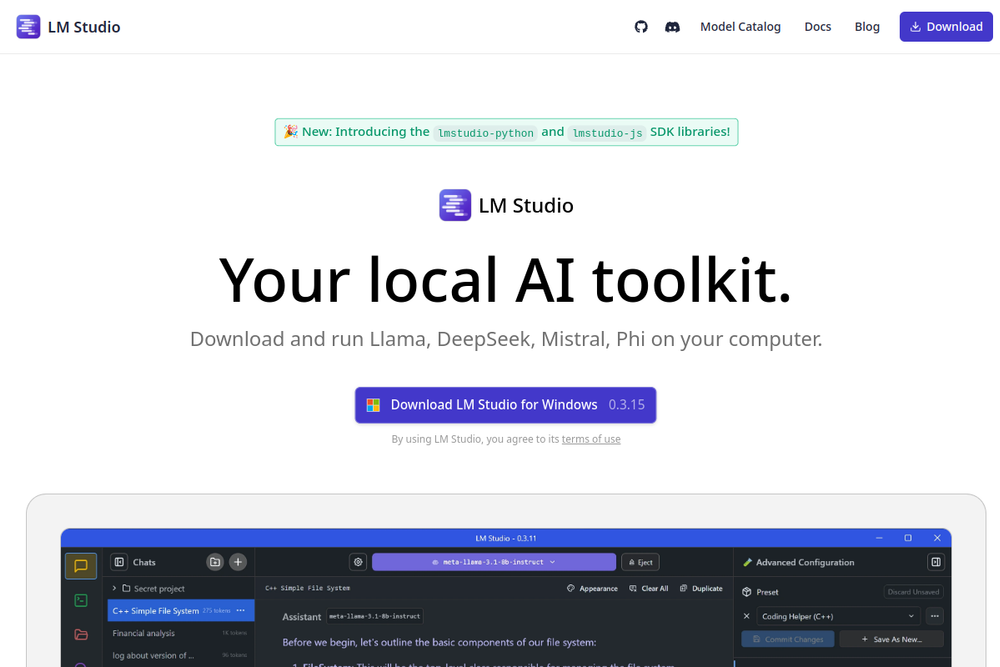

LM Studio

Discover, download, and run local LLMs such as Llama and DeepSeek on your own computer.