A GitHub repository for testing prompt injection techniques and developing defenses against them.

GitHub repository for optimization-based prompt injection attacks on LLM-as-a-judge systems.

Code to generate NeuralExecs for prompt injection attacks tailored for LLMs.

A repository for benchmarking prompt injection attacks against AI models like GPT-4 and Gemini.

Guard your LangChain applications against prompt injection with Lakera ChainGuard.

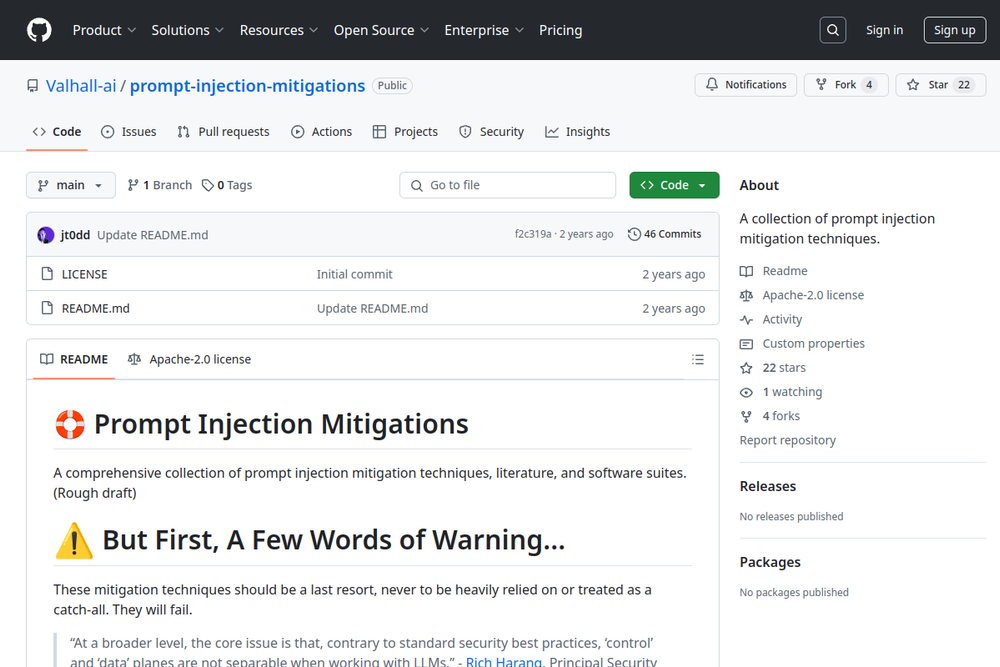

A collection of prompt injection mitigation techniques.

Official GitHub repository assessing prompt injection risks in user-designed GPTs.

An easy-to-use Python package for detecting prompt injection in LLM inputs.

An application that investigates defensive measures against prompt injection attacks on LLMs, focusing on the exposure of external tools.

A manual prompt injection and red-teaming tool for large language models.

Fine-tuning base models to create robust task-specific models for better performance.

This repository contains the official code for the paper on prompt injection and parameterization.