A practical guide to LLM hacking covering fundamentals, prompt injection, offense, and defense.

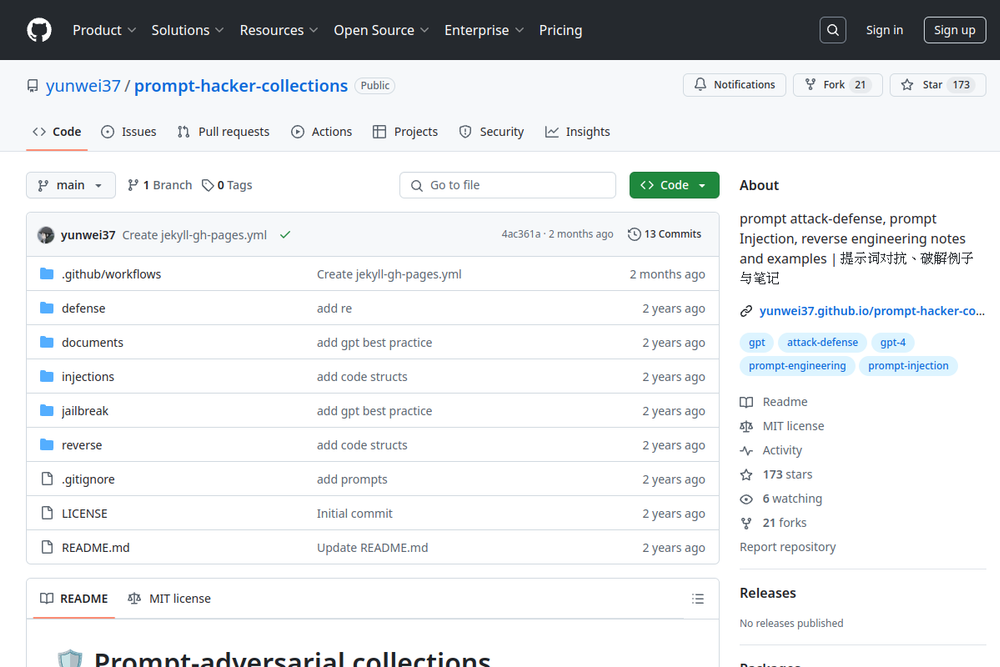

A GitHub repository containing resources on prompt attacks and defenses, as well as reverse engineering techniques.

This repository provides a benchmark for prompt injection attacks and defenses.

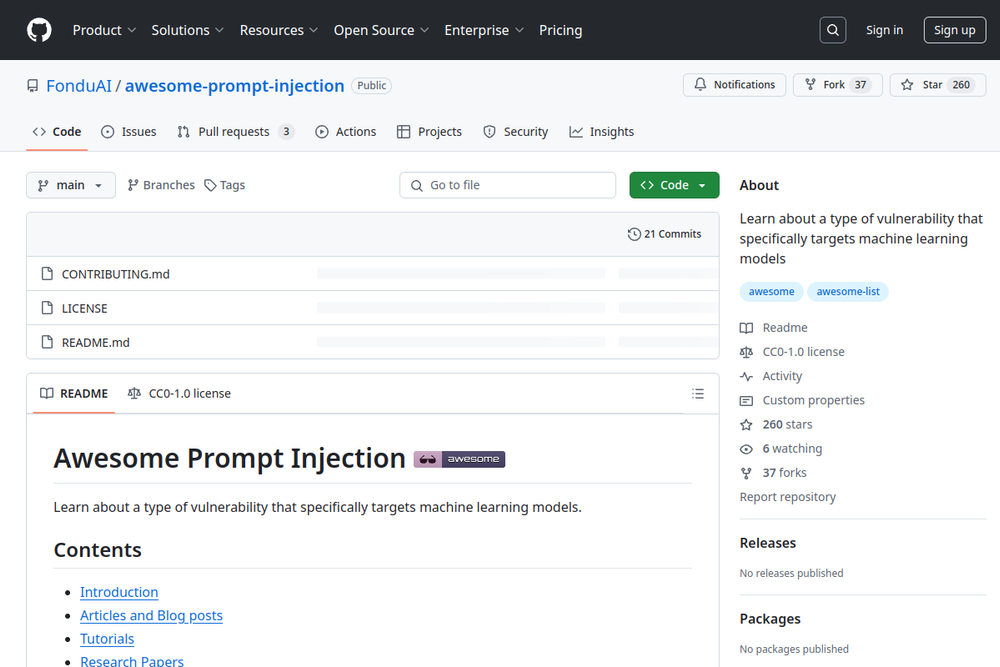

Learn about a type of vulnerability that specifically targets machine learning models.

A collection of examples for exploiting chatbot vulnerabilities using injections and encoding techniques.

Vigil is a security scanner for detecting prompt injections and other risks in Large Language Model inputs.

Every practical and proposed defense against prompt injection.

An LLM prompt injection detector designed to protect AI applications from prompt injection attacks.

A collection of GPT system prompts and various prompt injection/leaking knowledge.

This paper discusses new methods for generating transferable adversarial attacks on aligned language models, with implications for improving LLM security.

A resource for understanding adversarial prompting in LLMs and techniques to mitigate risks.