Guard your LangChain applications against prompt injection with Lakera ChainGuard.

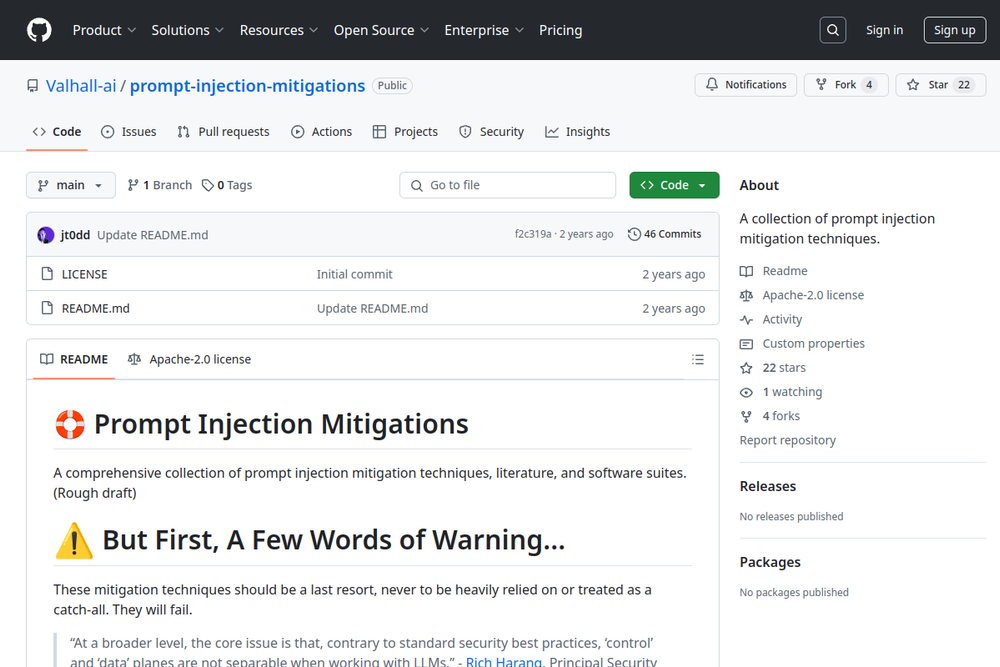

A collection of prompt injection mitigation techniques.

Official GitHub repository assessing prompt injection risks in user-designed GPTs.

Easy-to-use Python package for detecting prompt injection in LLM inputs.

Application that investigates defensive measures against prompt injection attacks on LLMs, with a focus on external tool exposure.

Short list of indirect prompt injection attacks for OpenAI-based models.

Manual prompt injection and red-teaming tool for large language models.

Fine-tuning base models to create robust task-specific models for better performance.

This repository contains the official code for the paper on prompt injection and parameterization.

Official implementation of StruQ, which defends against prompt injection attacks using structured queries.

The official implementation of InjecGuard, a tool for benchmarking and mitigating over-defense in prompt injection guardrail models.

A writeup for the Gandalf prompt injection game.