LLM Prompt Injection Detector, designed to protect AI applications from prompt injection attacks.

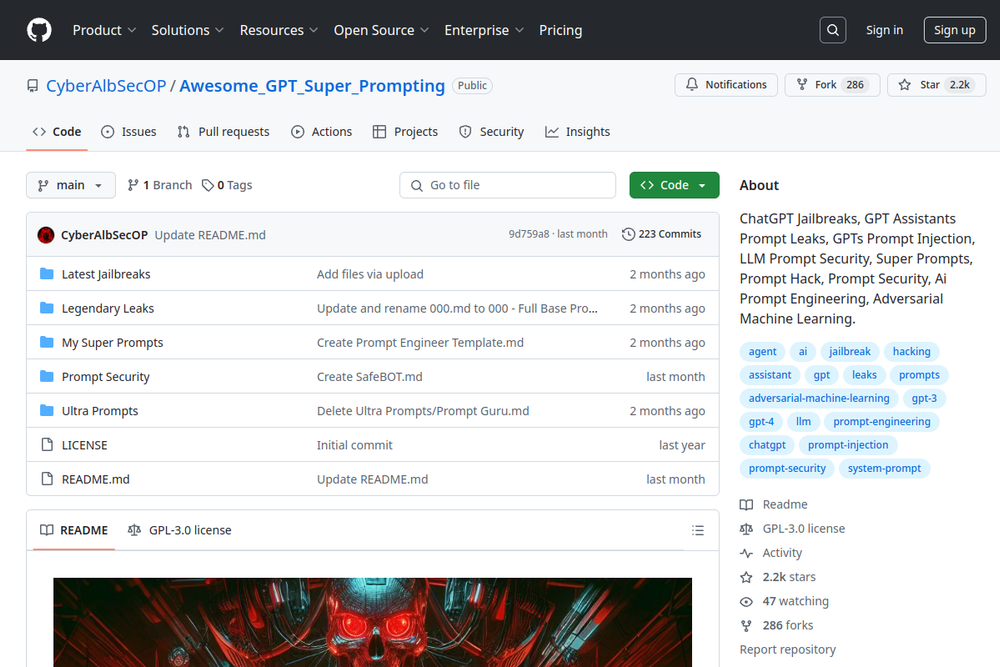

Explore ChatGPT jailbreaks, prompt leaks, injection techniques, and tools focused on LLM security and prompt engineering.

A collection of GPT system prompts and notes on prompt injection and prompt leaking.

A comprehensive overview of prompt injection vulnerabilities and potential solutions in AI applications.

Explores prompt injection attacks on AI tools such as ChatGPT, covering techniques and mitigation strategies.

A blog discussing prompt injection vulnerabilities in large language models (LLMs) and their implications.

Explores security vulnerabilities in ChatGPT plugins, focusing on data exfiltration through markdown injections.

A resource for understanding prompt injection vulnerabilities in AI, including techniques and real-world examples.

An LLM vulnerability scanner that checks large language models for weaknesses.

Aims to educate readers about the security risks of deploying large language models (LLMs).

This project investigates the security of large language models by classifying input prompts to discover malicious ones.