Official GitHub repository for assessing prompt injection risks in user-designed GPTs.

Easy-to-use Python package for detecting LLM prompt injection.

Short list of indirect prompt injection attacks for OpenAI-based models.

Manual prompt injection and red-teaming tool for large language models.

Official implementation of StruQ, which defends against prompt injection attacks using structured queries.

The official implementation of InjecGuard, a tool for benchmarking and mitigating over-defense in prompt injection guardrail models.

Repository for the research paper "SecAlign: Defending Against Prompt Injection with Preference Optimization".

A benchmark for evaluating prompt injection detection systems.

Ultra-fast, low-latency LLM security solution for prompt injection and jailbreak detection.

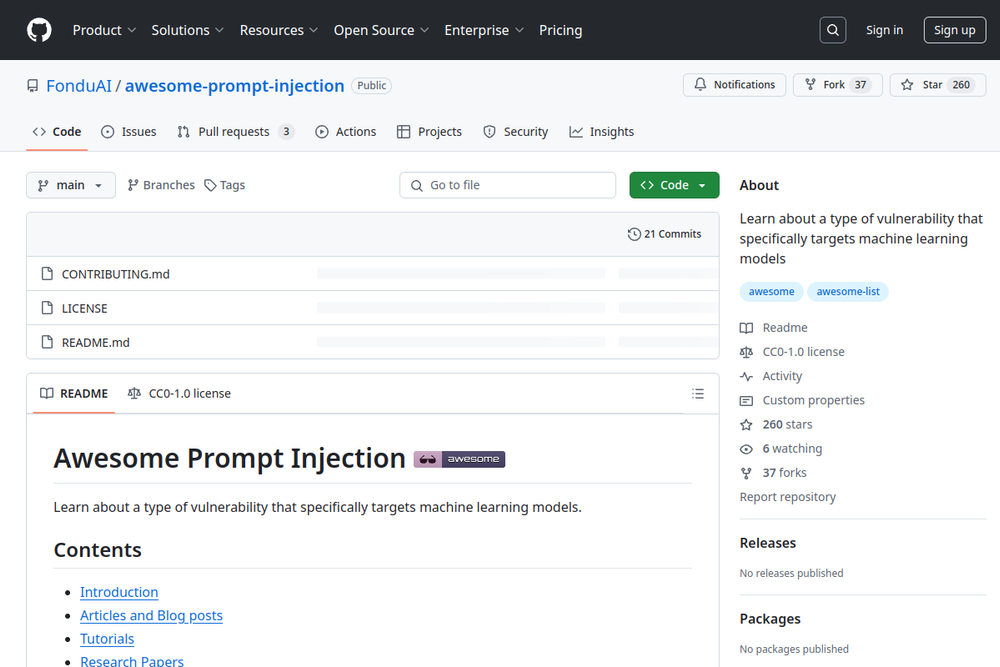

A GitHub repository showcasing various prompt injection techniques and defenses.

Learn about a type of vulnerability that specifically targets machine learning models.