A blog discussing prompt injection vulnerabilities in large language models (LLMs) and their implications.

Open-source tool by AIShield for AI model insights and vulnerability scans, securing the AI supply chain.

A plug-and-play AI red teaming toolkit to simulate adversarial attacks on machine learning models.

JailBench is a comprehensive Chinese dataset for assessing jailbreak attack risks in large language models.

A tool for optimizing prompts across various AI applications and security domains.

An AI prompt generator and optimizer that enhances prompt engineering for various AI applications.

An LLM vulnerability scanner that checks for weaknesses in large language models.

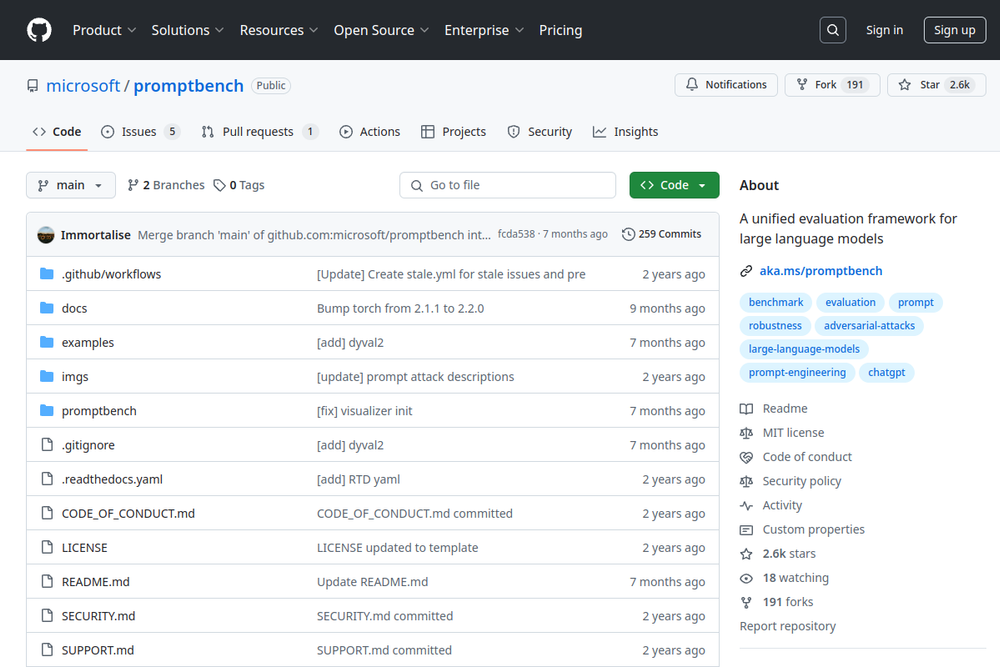

A unified evaluation framework for large language models.

Protect AI focuses on securing machine learning and AI applications with various open-source tools.

Test your prompting skills to make Gandalf reveal secret information.

Tips and tricks for working with Large Language Models like OpenAI's GPT-4.