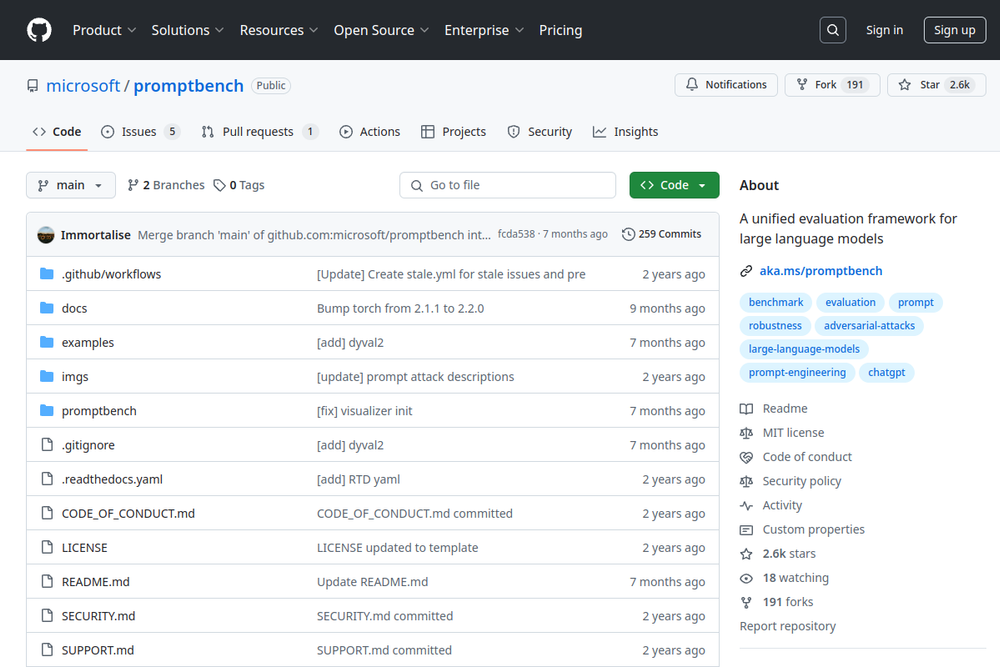

A unified evaluation framework for large language models.

Promptfoo is a local tool for testing LLM applications with security evaluations and performance comparisons.

Sample notebooks and prompts for evaluating large language models (LLMs) and generative AI.

Evals is a framework for evaluating LLMs and LLM systems, and an open-source registry of benchmarks.
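
As a rough illustration of what evaluating an LLM against a benchmark involves, here is a minimal, generic sketch. It is not the API of Evals or of any other tool listed here. The `exact_match_accuracy` helper, the `toy_model` stand-in, and the sample cases are all hypothetical, and exact-match scoring is only one of many possible metrics.

```python
from typing import Callable


def exact_match_accuracy(
    model: Callable[[str], str],
    cases: list[tuple[str, str]],
) -> float:
    """Return the fraction of cases whose normalized output equals the expected answer."""
    if not cases:
        return 0.0
    hits = sum(
        1
        for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(cases)


# Hypothetical stand-in for a real LLM call, used only to keep the sketch runnable.
def toy_model(prompt: str) -> str:
    answers = {"2 + 2 =": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "unknown")


# Hypothetical benchmark: (prompt, expected answer) pairs.
cases = [
    ("2 + 2 =", "4"),
    ("Capital of France?", "paris"),
    ("Largest planet?", "Jupiter"),
]
score = exact_match_accuracy(toy_model, cases)
print(f"exact-match accuracy: {score:.2f}")
```

In practice the model callable would wrap a real provider client, and the cases would come from a versioned dataset rather than an inline list.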

Automatable GenAI scripting: programmatically assemble prompts for LLMs using JavaScript.

Prompty simplifies the creation, management, debugging, and evaluation of LLM prompts for AI applications.

The open-source LLMOps platform: prompt playground, prompt management, LLM evaluation, and LLM observability all in one place.

Large Language Model in Action is a GitHub repository that demonstrates implementations and applications of large language models.

A Go implementation of the Model Context Protocol (MCP) for LLM applications.

An inference server and UI toolkit for prompt management, versioning, testing, and evaluation; provider-agnostic and OpenAI API-compatible.

The AI framework that adds engineering to prompt engineering, compatible with multiple programming languages.